Autonomous agents for multiplayer SuperTuxCart

March 2023

Team: Autonomous agents for multiplayer SuperTuxCart

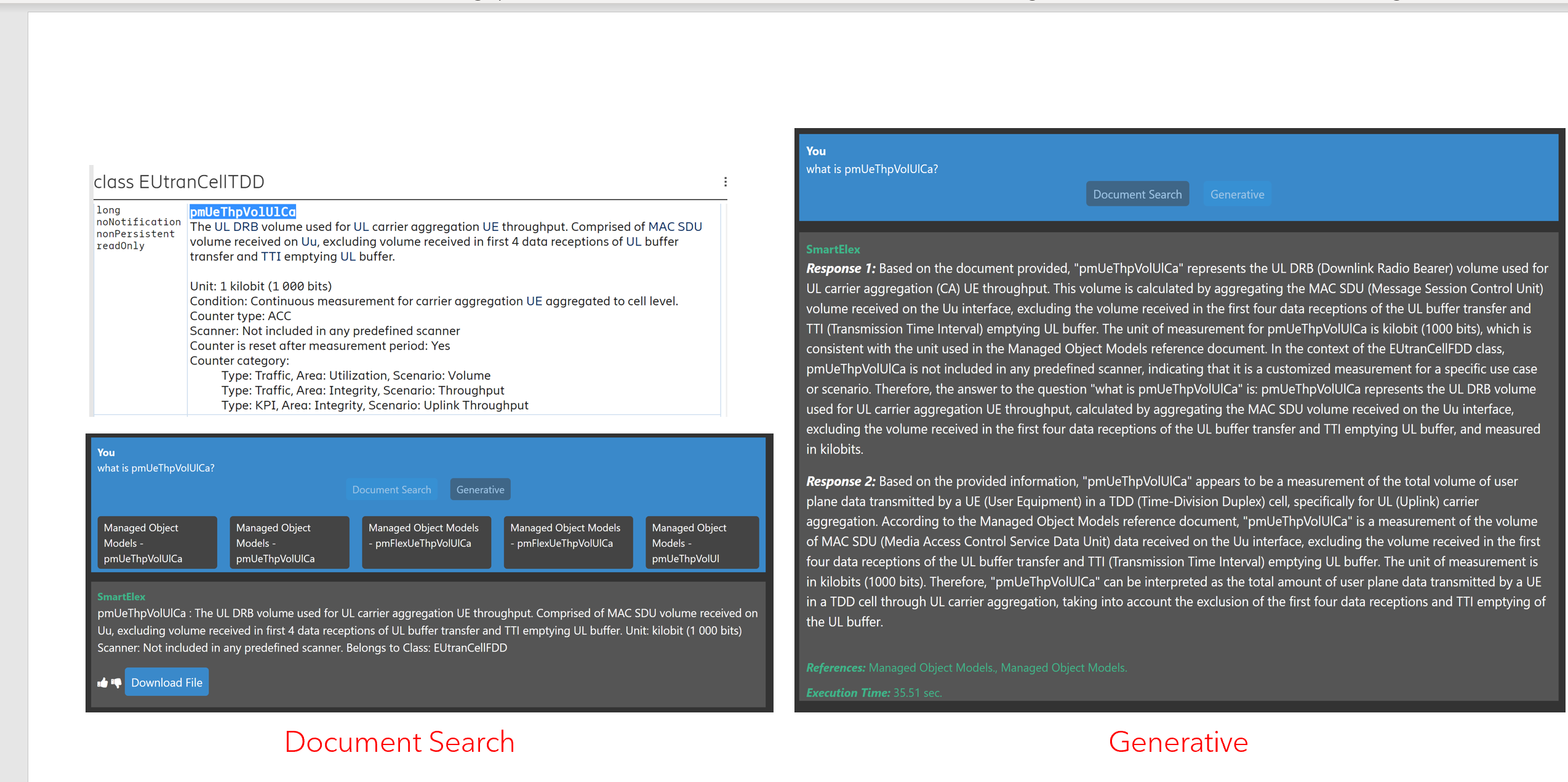

Resources: [Technical report]

Summary:

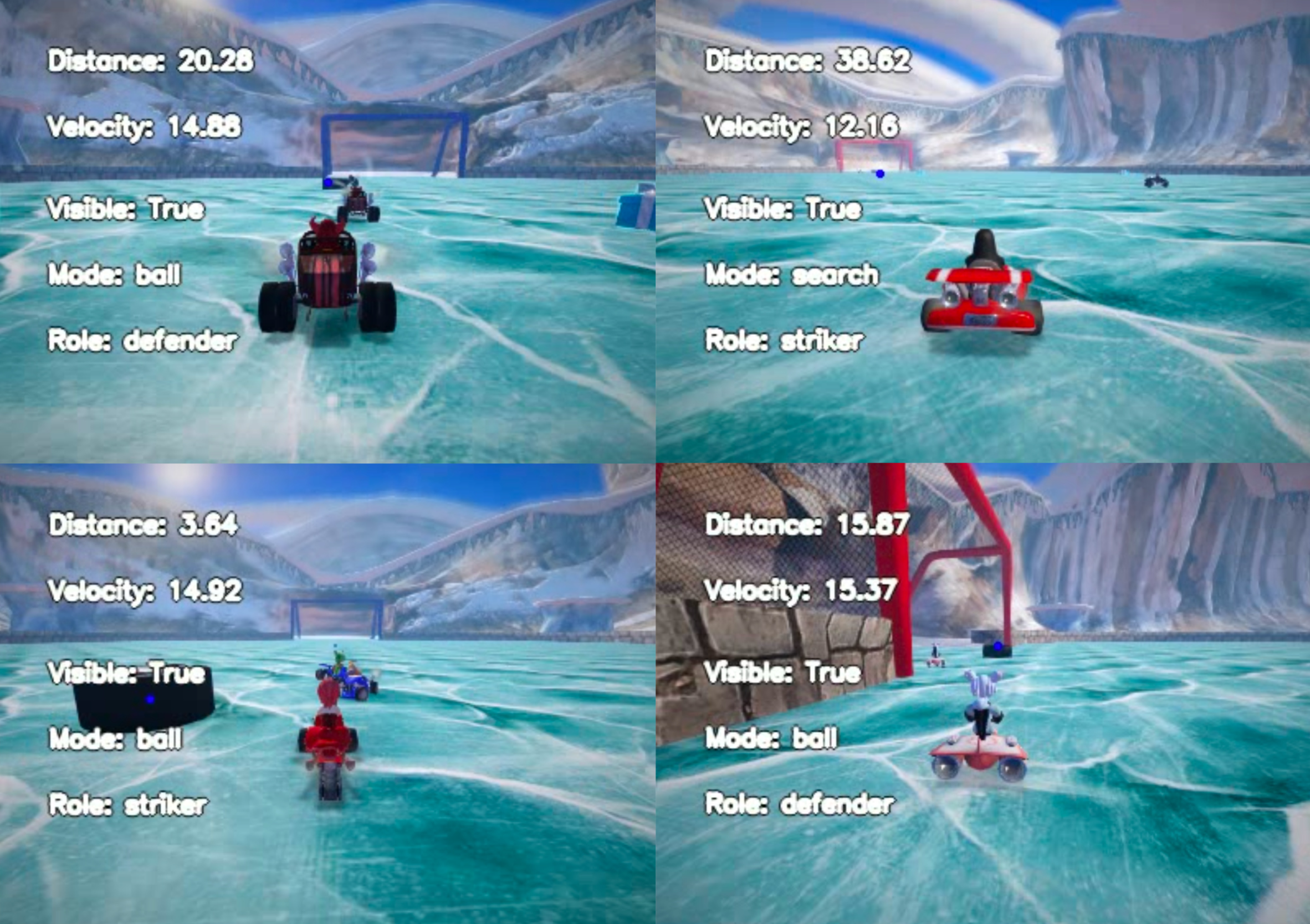

Project Goal: The goal of this project was to train an AI agent to play SuperTuxKart, competing with other trained agents in a 2v2 hockey game.

Project Description:

1) Approach:

i) Reinforcement Learning: Initially, we used Q-Learning for training. However, it struggled to learn optimal strategies.

ii) Imitation Learning: To overcome this, we trained the model using imitation learning on winning strategies derived from over 2000 games.

iii) Hybrid Model: We then improved the reinforcement learning model by using the trained imitation model for initialization. This helped further refine the agent’s strategy.

2) Future Work:

i) Data Augmentation: Incorporate data augmentation during training to randomize initialization and improve robustness.

ii) Internal State Controller: Explore the design of a hand-crafted internal state controller to compare its effectiveness against AI models.

Outcome: Our project successfully demonstrated the application of a hybrid approach, combining imitation learning with reinforcement learning, to train an AI agent for SuperTuxKart. The refined agent showed improved performance in minimizing player-puck and puck-goal distances, paving the way for future enhancements and comparative studies with hand-crafted controllers. This project consisted of training an agent to play SuperTuxKart to compete with the other trained agents in a 2v2 hockey game. Our strategy was to utilize reinforcement learning to minimize the player-puck and puck-goal distance. Our initial attempt at using Q Reinforcement Learning had trouble learning optimal strategies. Imitation Learning was then used to train the model on the winning strategies of over 2000 games. Our reinforcement learning model was then improved by using the imitation model as its initialization in order to further refine the agent’s strategy. Future work can involve utilizing data augmentation during training in order to randomize initialization. A hand-crafted internal state controller could also be designed to see if it is superior to the AI models.

My contribution: Generated about 2000 games in order to train the imitation learning model implementation. Worked on the Q-reinforcement learning algorithm that trained on the imitation model in order to create the final agent